This is one of four UX projects I conducted as the User Experience Librarian Intern at the University of Toronto Scarborough (UTSC) Library.

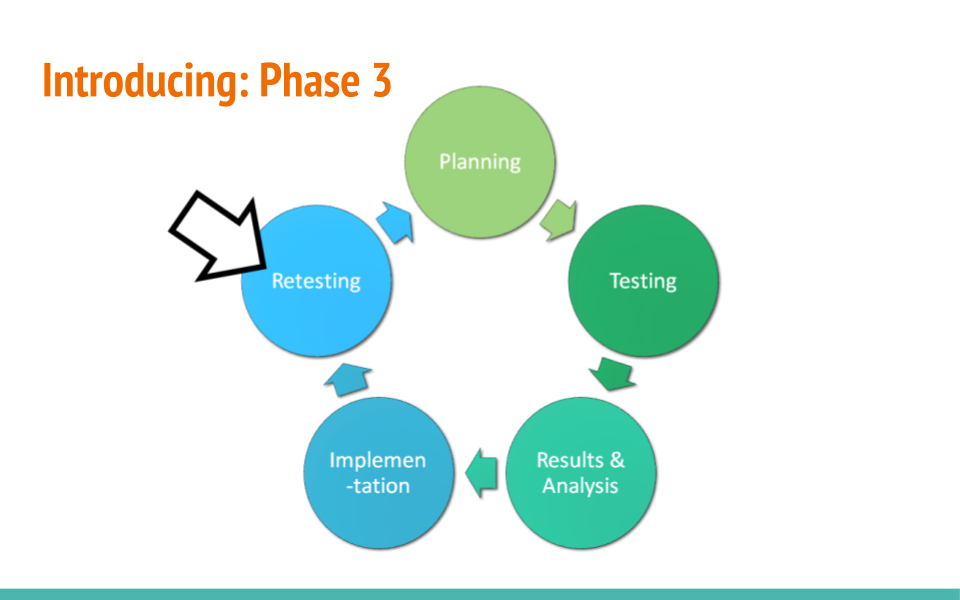

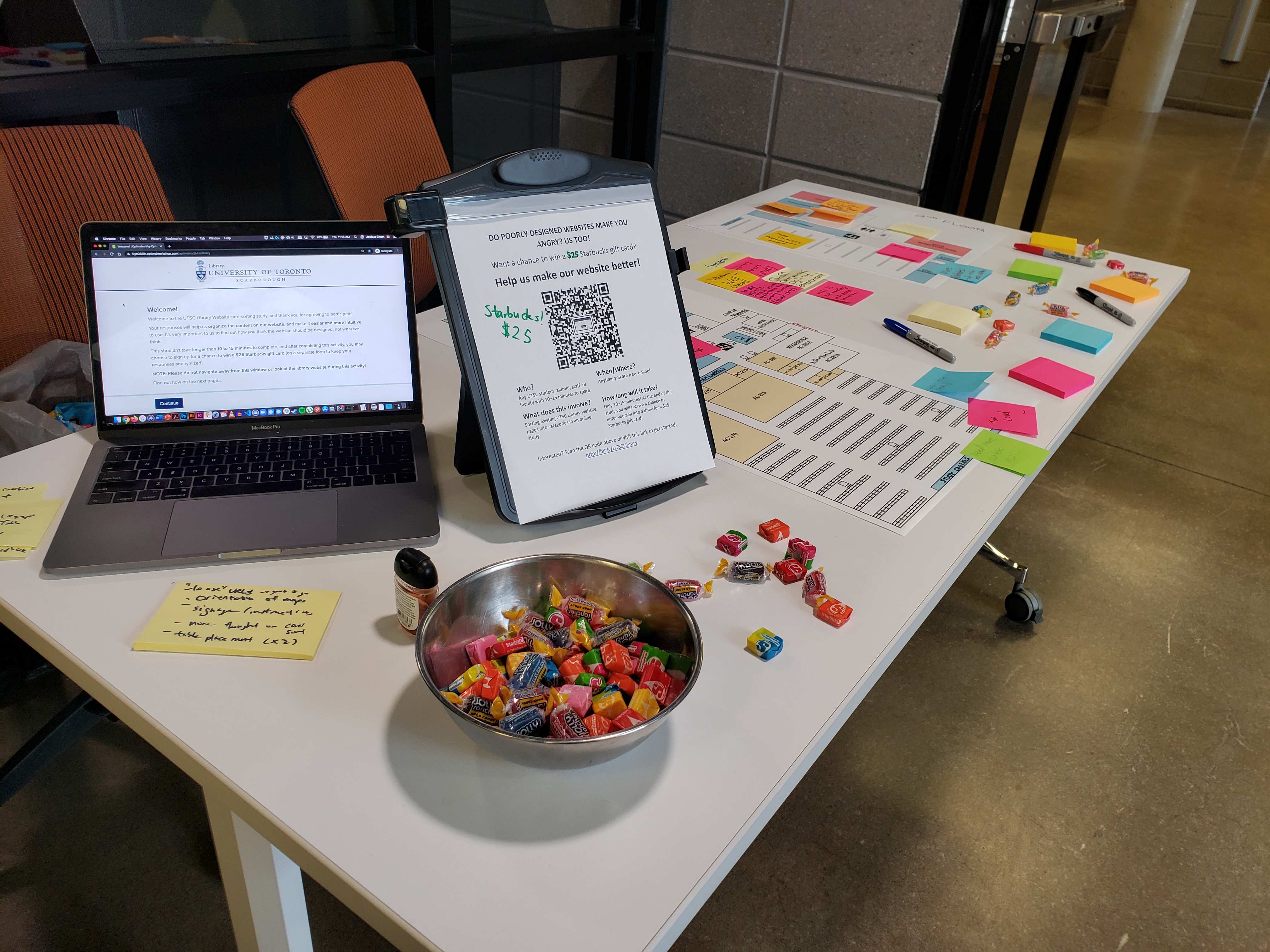

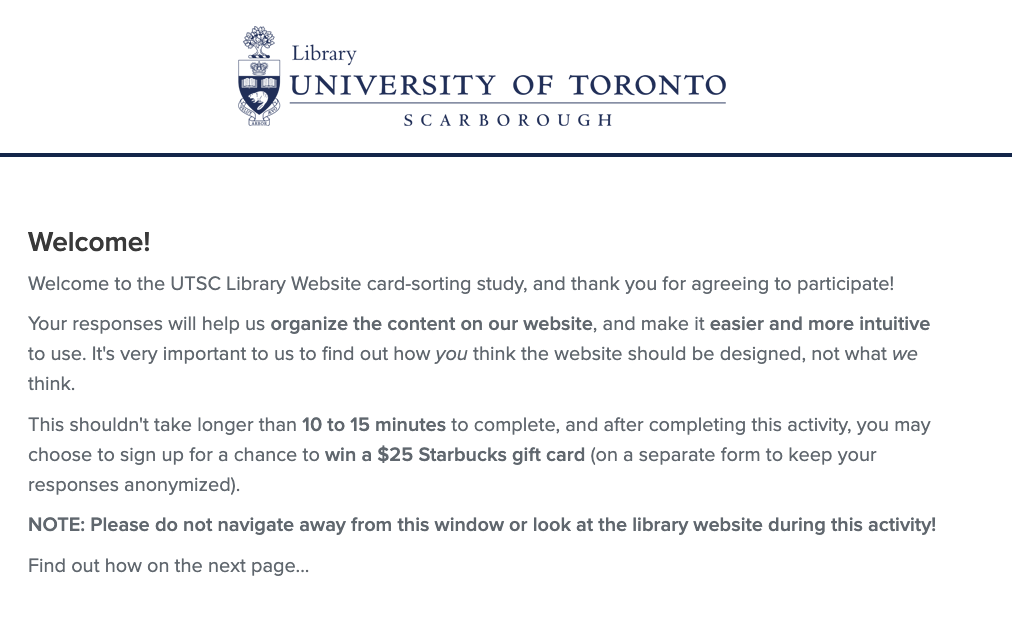

As part of an ongoing process to facilitate user-centred services and spaces at the University of Toronto Scarborough (UTSC), I partnered with the Web & User Experience (UX) Librarian to conduct Phase 3 of the UTSC Library Website redesign project. In the spirit of design thinking, library spaces and services should undergo iterative testing after implementation.

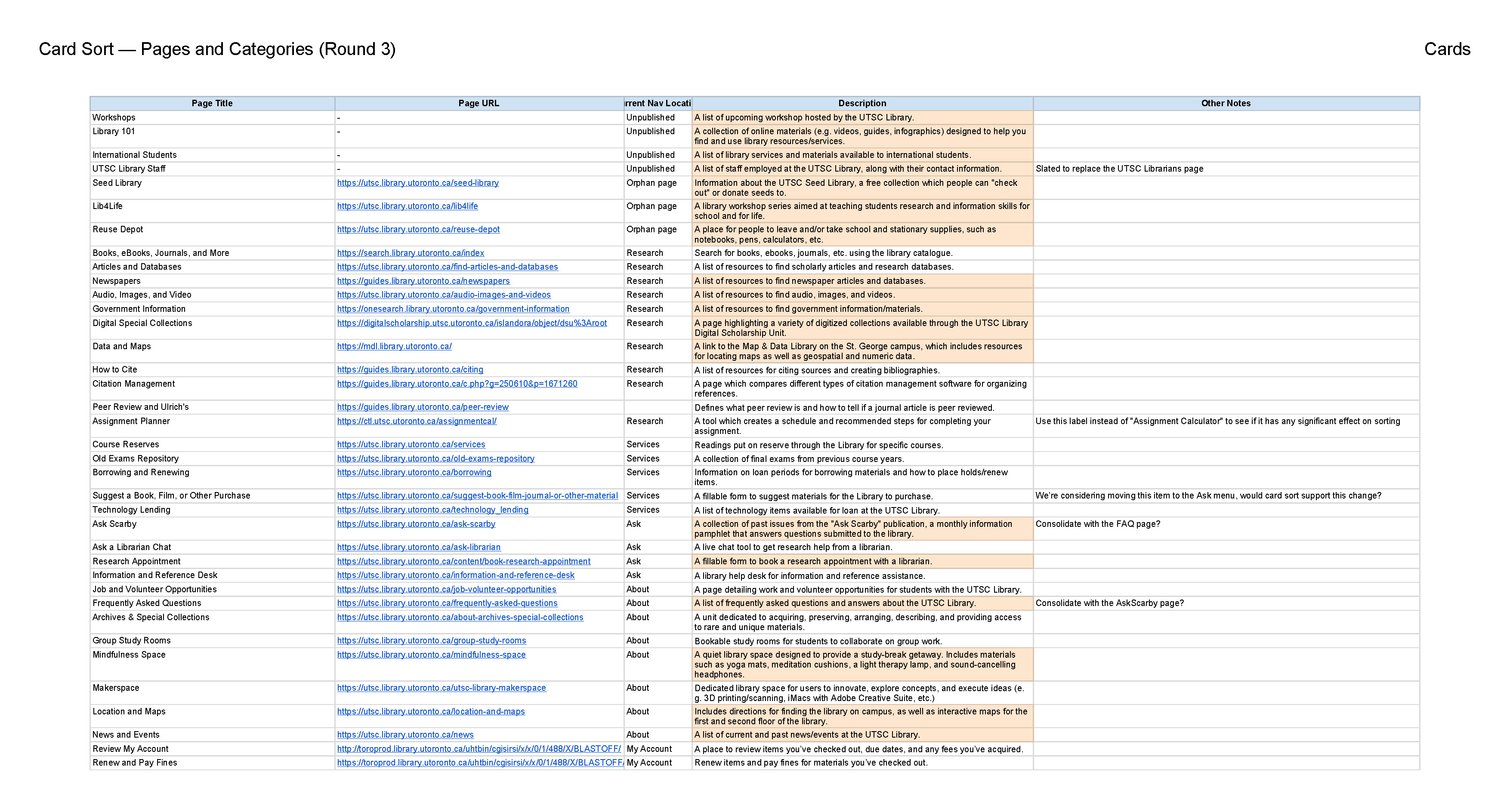

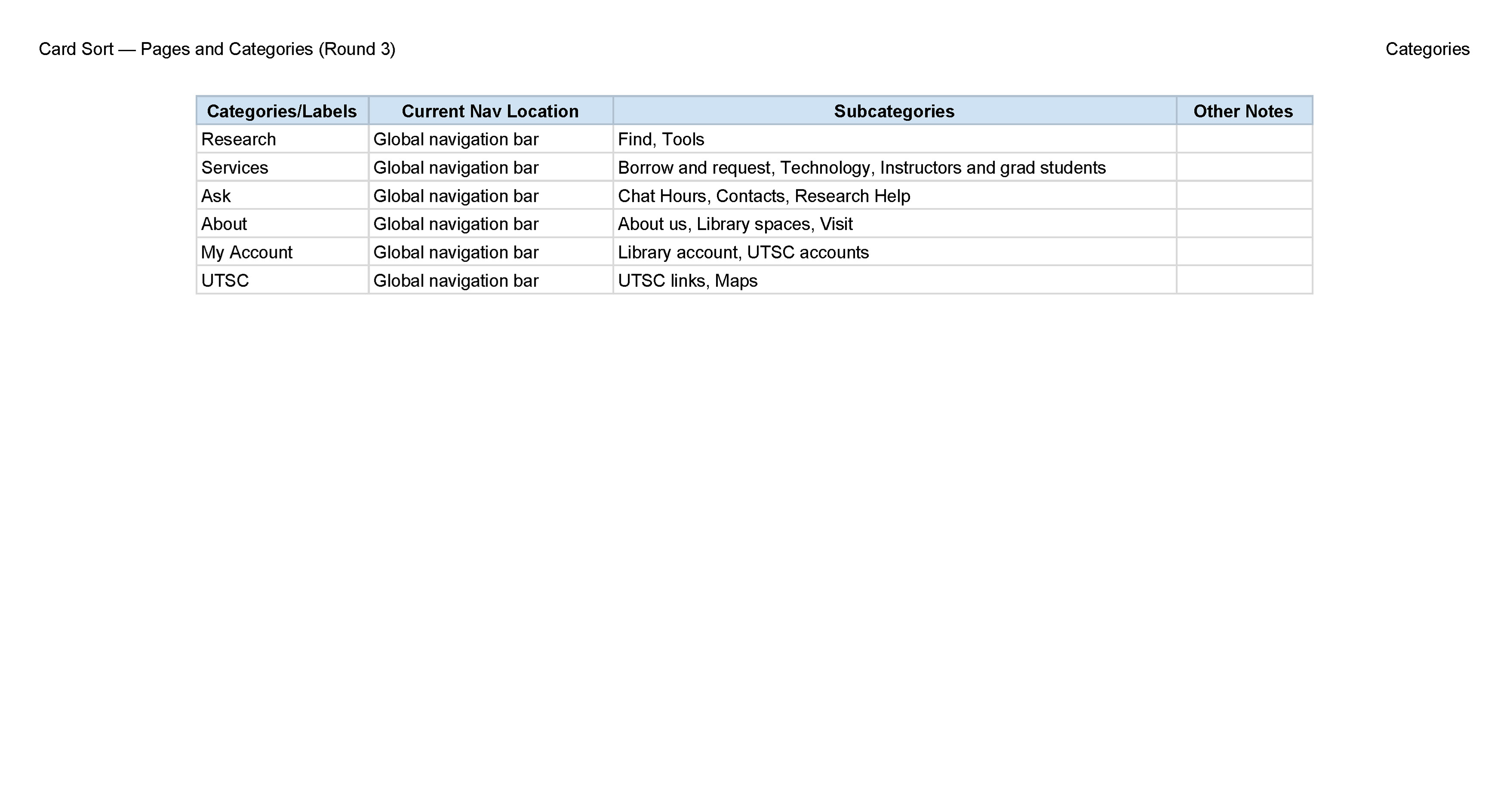

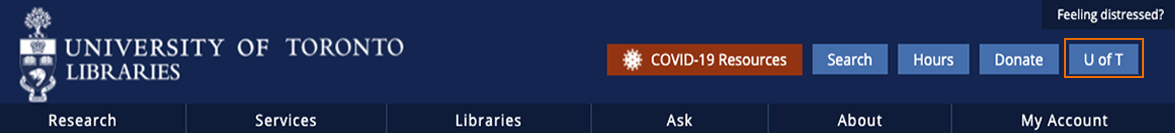

Previously, Phase 1 (Summer 2016) and Phase 2 (Summer 2018) involved physical card sorting and task-based usability testing conducted with undergraduate students at UTSC. These led to several positive information architecture changes, including the following:

- Revised the UTL course reserves module (prioritizing course code over course name/instructor).

- Recategorized pages (e.g. "Old Exams Repository" moved from Research to Services).

- Combined the top-level Visit and About menu categories.

- Added new sub-menus (e.g. Technology).

- Eliminated unclear or unnecessary sub-menus (e.g. Collections, Manage your content, and Use our spaces).

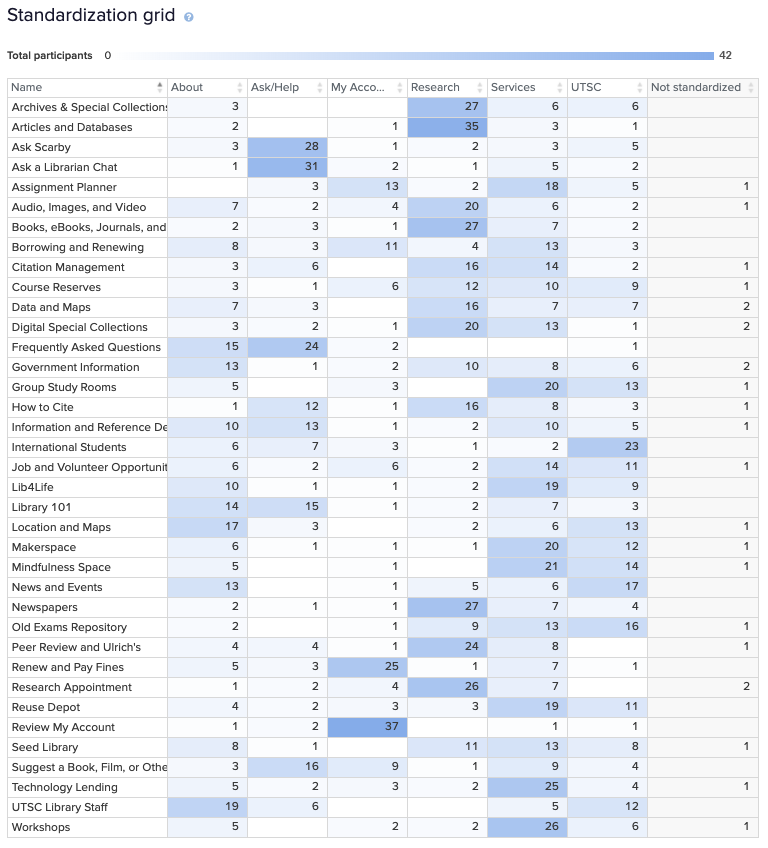

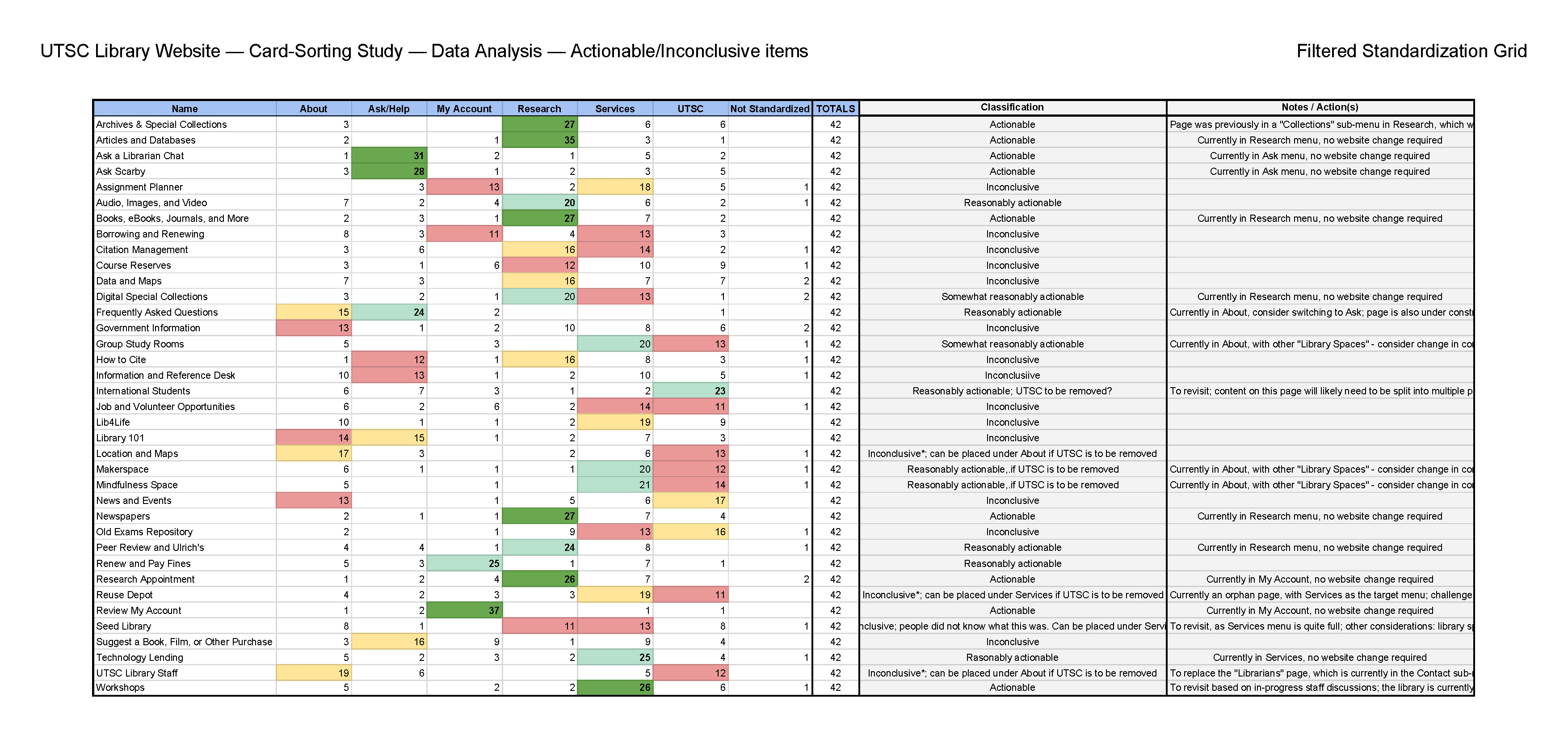

However, both phases identified persistently problematic areas, or "inconclusives". This necessitated Phase 3, with the following goals and objectives:

- Test whether the changes made during Phases 1 and 2 were effective.

- Gain clarity on the "inconclusives" from Phases 1 and 2.

- Gather evidence on where best to place new web pages.

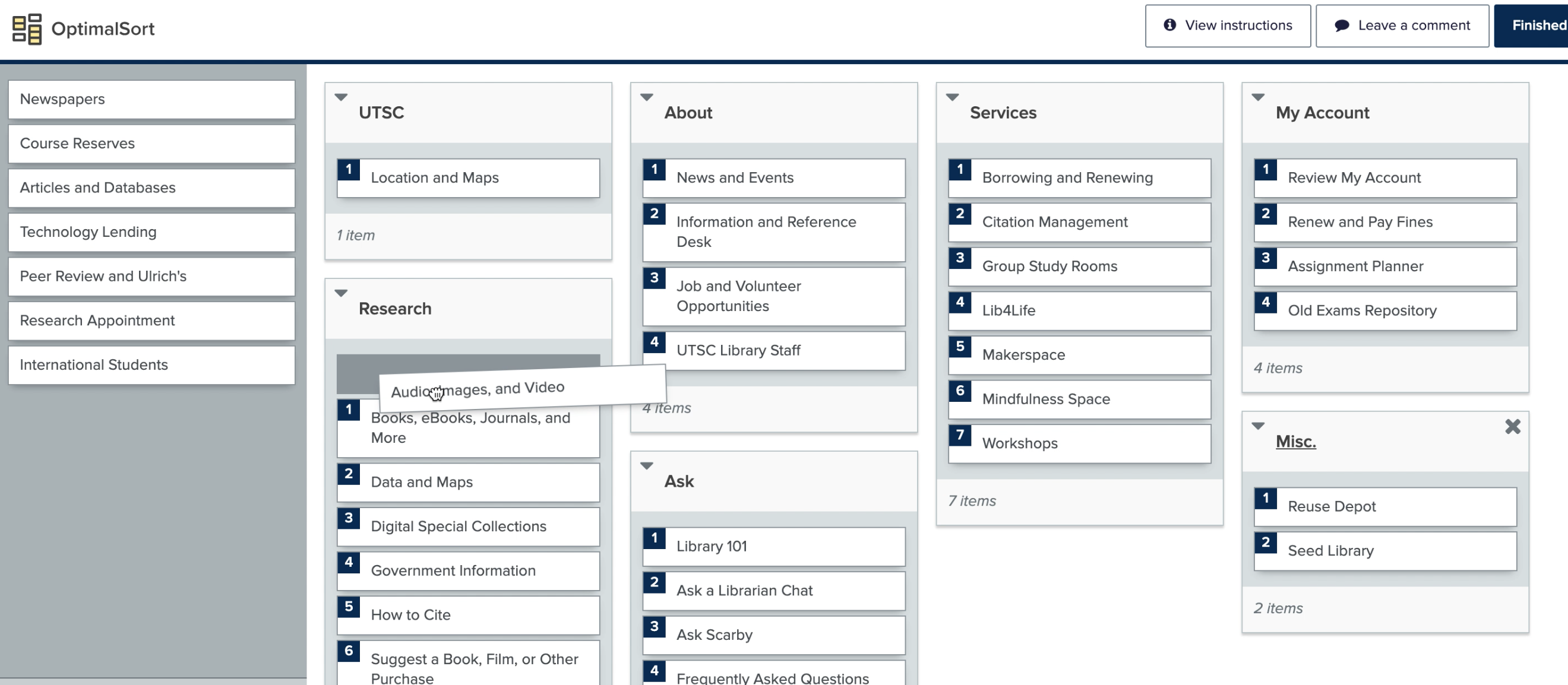

- Prioritize card sorting over task-based usability testing.